We earn commission when you buy through affiliate links.

This does not influence our reviews or recommendations.

Building one machine learning model is relatively easy.

Creating hundreds or thousands of models and iterating over existing ones is hard.

It is easy to get lost in the chaos.

Bringing order to the chaos requires the whole team to follow a process and document its activities.

This is the essence of MLOps.

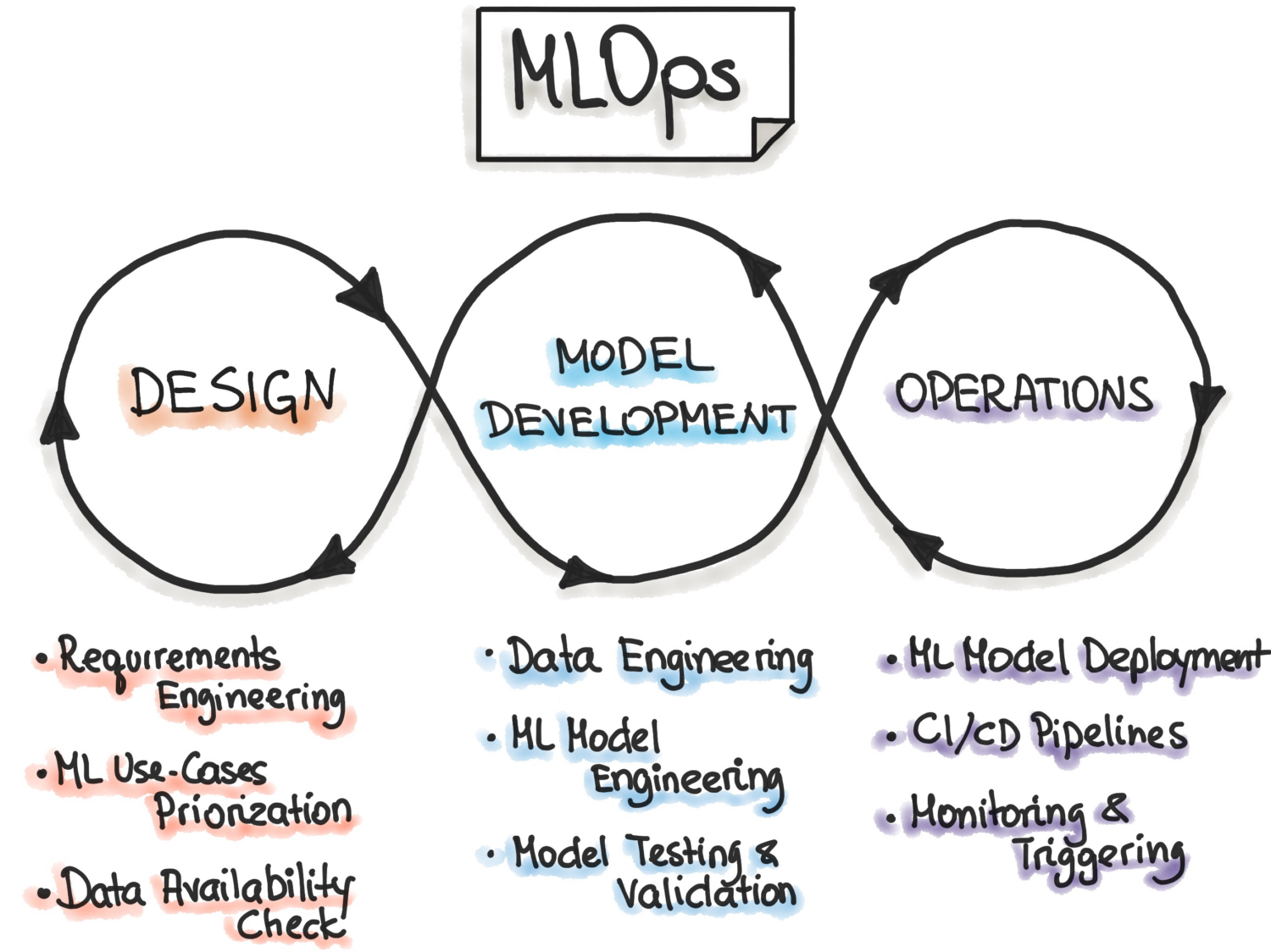

What is MLOps?

MLOps is essentially the application of DevOps principles to machine learning.

As in DevOps, the key idea of MLOps is automation: reducing manual steps to increase efficiency.

Also like DevOps, MLOps includes both Continuous Integration (CI) and Continuous Delivery (CD).

In addition to those two, it also includes Continuous Training (CT).

CT covers retraining models on new data and redeploying them.
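To make the retrain-and-redeploy loop concrete, here is a minimal Python sketch of a single CT step. It assumes a toy setup that does not come from any particular platform: the "model" is a least-squares line, and a candidate trained on fresh data replaces the live model only if it achieves a lower mean squared error on a holdout set. The names `train`, `Deployment`, and `continuous_training_step` are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, List, Tuple

# Hypothetical toy types: a model maps one feature value to a prediction,
# and a dataset is a list of (x, y) pairs.
Model = Callable[[float], float]
Dataset = List[Tuple[float, float]]


def train(data: Dataset) -> Model:
    """Fit y = slope * x + intercept by least squares (stand-in for a real training job)."""
    x_bar = mean(x for x, _ in data)
    y_bar = mean(y for _, y in data)
    var = sum((x - x_bar) ** 2 for x, _ in data)
    cov = sum((x - x_bar) * (y - y_bar) for x, y in data)
    slope = cov / var if var else 0.0
    intercept = y_bar - slope * x_bar
    return lambda x: slope * x + intercept


def mse(model: Model, data: Dataset) -> float:
    """Mean squared error of the model on a dataset."""
    return mean((model(x) - y) ** 2 for x, y in data)


@dataclass
class Deployment:
    model: Model
    version: int = 1


def continuous_training_step(live: Deployment, new_data: Dataset,
                             holdout: Dataset) -> Deployment:
    """Retrain on new data and redeploy only if the candidate beats the live model."""
    candidate = train(new_data)
    if mse(candidate, holdout) < mse(live.model, holdout):
        return Deployment(candidate, live.version + 1)
    return live  # the candidate was not better, so keep serving the current model


if __name__ == "__main__":
    live = Deployment(train([(0, 0), (1, 1), (2, 2)]))  # learns y = x
    # The relationship in the data has since shifted to y = 2x + 1.
    fresh = [(0, 1), (1, 3), (2, 5), (3, 7)]
    holdout = [(4, 9), (5, 11)]
    live = continuous_training_step(live, fresh, holdout)
    print(live.version)  # 2
```

Real CT pipelines typically trigger this step on a schedule or on a drift alert and add more validation gates before redeploying. The comparison against the live model is the part that keeps a bad retrain from reaching production.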

MLflow

MLflow is perhaps the most popular machine learning lifecycle management platform.

It is free and open source.

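Its main components are MLflow Tracking (logging parameters, metrics, and artifacts from experiment runs), MLflow Projects (packaging code for reproducible runs), MLflow Models (a standard format for packaging models), and the Model Registry (versioning and managing models through their lifecycle).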

MLflow integrates with popular machine learning libraries such as TensorFlow and PyTorch.

It also works with different cloud providers such as AWS, Google Cloud, and Microsoft Azure.
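Experiment tracking is the MLflow feature most teams encounter first. The sketch below is a hypothetical, in-memory stand-in written in plain Python, not MLflow's API, that shows the idea: every run records its parameters and metrics so runs can be compared later. In MLflow itself, the corresponding calls are `mlflow.start_run()`, `mlflow.log_param()`, and `mlflow.log_metric()`, and runs are persisted to a tracking server or local store rather than kept in a Python list.

```python
import uuid
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Run:
    """One training run with its parameters and metrics (hypothetical, not MLflow)."""
    experiment: str
    run_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    params: Dict[str, str] = field(default_factory=dict)
    metrics: Dict[str, float] = field(default_factory=dict)

    def log_param(self, key: str, value: object) -> None:
        self.params[key] = str(value)  # this sketch stores parameters as strings

    def log_metric(self, key: str, value: float) -> None:
        self.metrics[key] = float(value)


class InMemoryTracker:
    """Toy stand-in for a tracking server: keeps every run in a list."""

    def __init__(self) -> None:
        self.runs: List[Run] = []

    def start_run(self, experiment: str) -> Run:
        run = Run(experiment)
        self.runs.append(run)
        return run

    def best_run(self, experiment: str, metric: str,
                 higher_is_better: bool = True) -> Optional[Run]:
        """Return the run with the best value of `metric`, or None if no run logged it."""
        candidates = [r for r in self.runs
                      if r.experiment == experiment and metric in r.metrics]
        if not candidates:
            return None
        pick = max if higher_is_better else min
        return pick(candidates, key=lambda r: r.metrics[metric])


if __name__ == "__main__":
    tracker = InMemoryTracker()
    # Placeholder scores for illustration only.
    for lr, acc in [(0.1, 0.87), (0.01, 0.91)]:
        run = tracker.start_run("churn-model")
        run.log_param("learning_rate", lr)
        run.log_metric("accuracy", acc)
    print(tracker.best_run("churn-model", "accuracy").params["learning_rate"])  # 0.01
```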

Azure Machine Learning

Azure Machine Learning is an end-to-end machine learning platform.

It manages the different machine learning lifecycle activities in your MLOps pipeline.

It also integrates with ONNX Runtime and DeepSpeed to optimize your training and inference.

Azure Machine Learning integrates with Git and GitHub Actions to build workflows.

It also supports a hybrid or multi-cloud setup.

Google Vertex AI

Google Vertex AI is a unified data and AI platform.

It provides the tooling you need to build custom models and to use pre-trained ones.

It also serves as an end-to-end solution for implementing MLOps.

The API enables developers to integrate it with existing systems.

Google Vertex AI also enables you to build generative AI apps using Generative AI Studio.

It makes deploying and managing infrastructure easy and fast.

Databricks

Databricks is a data lakehouse platform that enables you to prepare and process data.

With Databricks, you can manage the entire machine learning lifecycle, from experimentation to production.

Databricks provides collaborative notebooks that support Python, R, SQL, and Scala.

In addition, it simplifies managing infrastructure by providing preconfigured clusters that are optimized for Machine Learning tasks.

Amazon SageMaker

For data, SageMaker can pull data from Amazon Simple Storage Service (Amazon S3).

By default, you get implementations of common machine learning algorithms such as linear regression and image classification.

Alternatively, you might opt for a code-first approach and implement models using custom code.

DataRobot

DataRobot comes with notebooks for writing and editing code.

Its GUI also lets you track your model experiments.

Run AI

Run AI attempts to solve the problem of underutilized AI infrastructure, particularly GPUs.

It does so by making all infrastructure visible and ensuring that it is used during training.

To do this, Run AI sits between your MLOps software and the firm's hardware.

From this layer, all training jobs are run through Run AI.

The platform, in turn, schedules when each of these jobs is run.

It provides a layer of abstraction to Machine Learning teams by functioning as a GPU virtualization platform.

You can run tasks from Jupyter Notebook, a bash terminal, or a remote PyCharm session.
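The scheduling idea can be illustrated with a deliberately simplified sketch: a shared pool of GPUs, a first-in, first-out queue of training jobs, and jobs that start as soon as enough GPUs are free. This is a toy under stated assumptions, not Run AI's actual scheduler; `GpuPoolScheduler` and `Job` are hypothetical names, and only whole GPUs are modeled.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict, List


@dataclass
class Job:
    name: str
    gpus: int  # number of whole GPUs the job requests


class GpuPoolScheduler:
    """Hypothetical strict-FIFO scheduler over a shared GPU pool (not Run AI's algorithm)."""

    def __init__(self, total_gpus: int) -> None:
        self.free = total_gpus
        self.queue: Deque[Job] = deque()
        self.running: Dict[str, Job] = {}

    def submit(self, job: Job) -> List[str]:
        """Queue a job and return the names of any jobs that started as a result."""
        self.queue.append(job)
        return self._schedule()

    def finish(self, name: str) -> List[str]:
        """Release a finished job's GPUs and start whatever now fits."""
        job = self.running.pop(name)
        self.free += job.gpus
        return self._schedule()

    def _schedule(self) -> List[str]:
        # Start jobs from the head of the queue while enough GPUs are free.
        started = []
        while self.queue and self.queue[0].gpus <= self.free:
            job = self.queue.popleft()
            self.free -= job.gpus
            self.running[job.name] = job
            started.append(job.name)
        return started


if __name__ == "__main__":
    scheduler = GpuPoolScheduler(total_gpus=4)
    print(scheduler.submit(Job("resnet", 2)))  # ['resnet']
    print(scheduler.submit(Job("bert", 4)))    # [] (waits for GPUs)
    print(scheduler.finish("resnet"))          # ['bert']
```

Strict FIFO order is the simplest policy, but a large job at the head of the queue can leave GPUs idle while smaller jobs wait behind it; production schedulers typically add priorities, quotas, or backfilling to avoid that.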

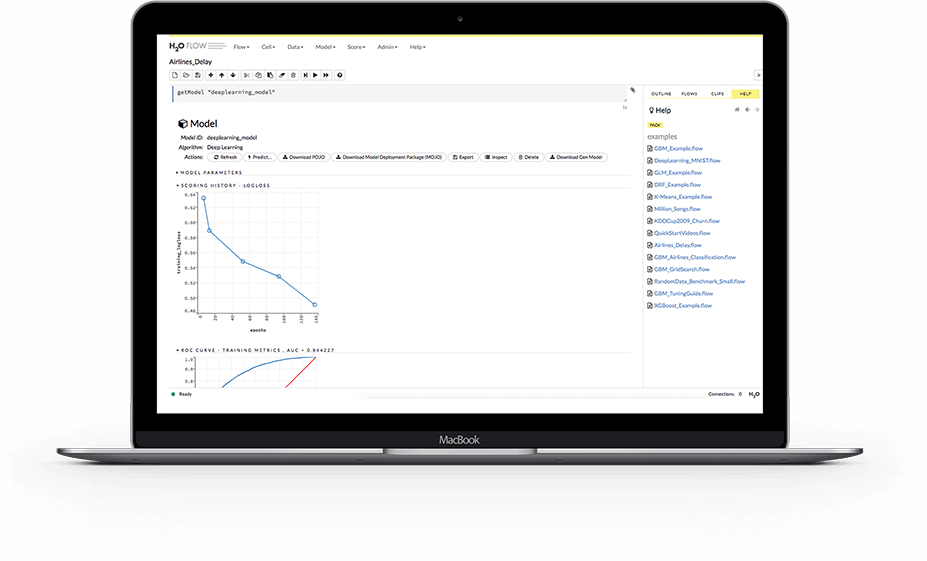

H2O.ai

H2O is an open-source, distributed machine learning platform.

As an MLOps platform, H2O provides a number of key features.

First, it simplifies deploying a model to a server as a REST endpoint.

It supports several deployment topologies, such as A/B tests, champion-challenger models, and simple single-model deployment.
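The difference between the two multi-model deployment modes can be sketched in a few lines of Python. In an A/B test, each request is routed to one model according to traffic weights. In the champion-challenger variant sketched here, the champion serves every request while the challenger's prediction is only logged for later comparison. The functions and toy models are hypothetical illustrations, not H2O's deployment API.

```python
import random
from typing import Callable, Dict, List, Tuple

Model = Callable[[float], float]  # hypothetical toy model type


def ab_route(models: Dict[str, Model], weights: Dict[str, float],
             x: float, rng: random.Random) -> Tuple[str, float]:
    """A/B test: route the request to one model, chosen at random by traffic weight."""
    names = list(models)
    chosen = rng.choices(names, weights=[weights[n] for n in names], k=1)[0]
    return chosen, models[chosen](x)


def champion_challenger(champion: Model, challenger: Model, x: float,
                        shadow_log: List[Tuple[float, float, float]]) -> float:
    """Serve the champion's prediction; record the challenger's for offline comparison."""
    served = champion(x)
    shadow_log.append((x, served, challenger(x)))
    return served


if __name__ == "__main__":
    models = {"A": lambda x: x, "B": lambda x: 2 * x}
    rng = random.Random(42)  # seeded so the routing is repeatable
    routed = [ab_route(models, {"A": 0.9, "B": 0.1}, 3.0, rng)[0] for _ in range(1000)]
    print(routed.count("A"), routed.count("B"))

    log: List[Tuple[float, float, float]] = []
    print(champion_challenger(models["A"], models["B"], 5.0, log))  # 5.0
    print(log)  # [(5.0, 5.0, 10.0)]
```

A/B routing exposes real users to both models, while the shadow approach compares a challenger without any user ever seeing its output.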

During training, it stores and manages data, artifacts, experiments, models, and deployments.

This allows models to be reproducible.

It also enables permission management at group and user levels to govern models and data.
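Group- and user-level permissions often reduce to an access-control list (ACL): a user may perform an action on a resource if that action is granted to the user directly or to any group the user belongs to. The sketch below assumes that simple model. The `AccessControl` class and the `user:`/`group:` naming are hypothetical and are not H2O's permission API.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class AccessControl:
    """Hypothetical ACL: actions granted per resource to users or groups."""
    group_members: Dict[str, Set[str]] = field(default_factory=dict)
    # resource -> principal ("user:<name>" or "group:<name>") -> allowed actions
    grants: Dict[str, Dict[str, Set[str]]] = field(default_factory=dict)

    def grant(self, resource: str, principal: str, action: str) -> None:
        self.grants.setdefault(resource, {}).setdefault(principal, set()).add(action)

    def is_allowed(self, user: str, resource: str, action: str) -> bool:
        """True if the action is granted to the user or to any of the user's groups."""
        acl = self.grants.get(resource, {})
        principals = {f"user:{user}"} | {
            f"group:{g}" for g, members in self.group_members.items() if user in members
        }
        return any(action in acl.get(p, set()) for p in principals)


if __name__ == "__main__":
    ac = AccessControl(group_members={"data-science": {"alice", "bob"}})
    ac.grant("model:churn", "group:data-science", "read")
    ac.grant("model:churn", "user:alice", "deploy")
    print(ac.is_allowed("bob", "model:churn", "read"))    # True (via the group)
    print(ac.is_allowed("bob", "model:churn", "deploy"))  # False
```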

While the model is running, H2O also provides real-time monitoring for model drift and other operational metrics.
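One common way to quantify drift is the Population Stability Index (PSI). It compares the binned distribution of a feature, or of model scores, at training time against live traffic: PSI = sum over bins of (a - e) * ln(a / e), where e and a are the baseline and live shares of each bin. The sketch below assumes fixed bin edges and a small epsilon to avoid taking the log of zero. PSI is shown only as an example metric; the source does not say which drift measures H2O uses.

```python
import bisect
import math
from typing import List, Sequence


def bin_proportions(values: Sequence[float], edges: Sequence[float],
                    eps: float = 1e-6) -> List[float]:
    """Share of values per bin; out-of-range values fall into the first or last bin."""
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        counts[min(max(i, 0), n_bins - 1)] += 1
    total = max(len(values), 1)
    # Floor each share at eps so empty bins do not cause log(0) or division by zero.
    return [max(c / total, eps) for c in counts]


def population_stability_index(baseline: Sequence[float], live: Sequence[float],
                               edges: Sequence[float]) -> float:
    """PSI = sum over bins of (a - e) * ln(a / e); 0 means identical binned shares."""
    expected = bin_proportions(baseline, edges)
    actual = bin_proportions(live, edges)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


if __name__ == "__main__":
    edges = [0.0, 1.0, 2.0, 3.0]
    training_scores = [0.5, 1.5, 2.5] * 10
    live_scores = [1.5, 2.5, 2.5] * 10  # live traffic skewed toward higher scores
    print(round(population_stability_index(training_scores, live_scores, edges), 3))
```

A frequently cited rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift, but thresholds are best tuned per feature.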

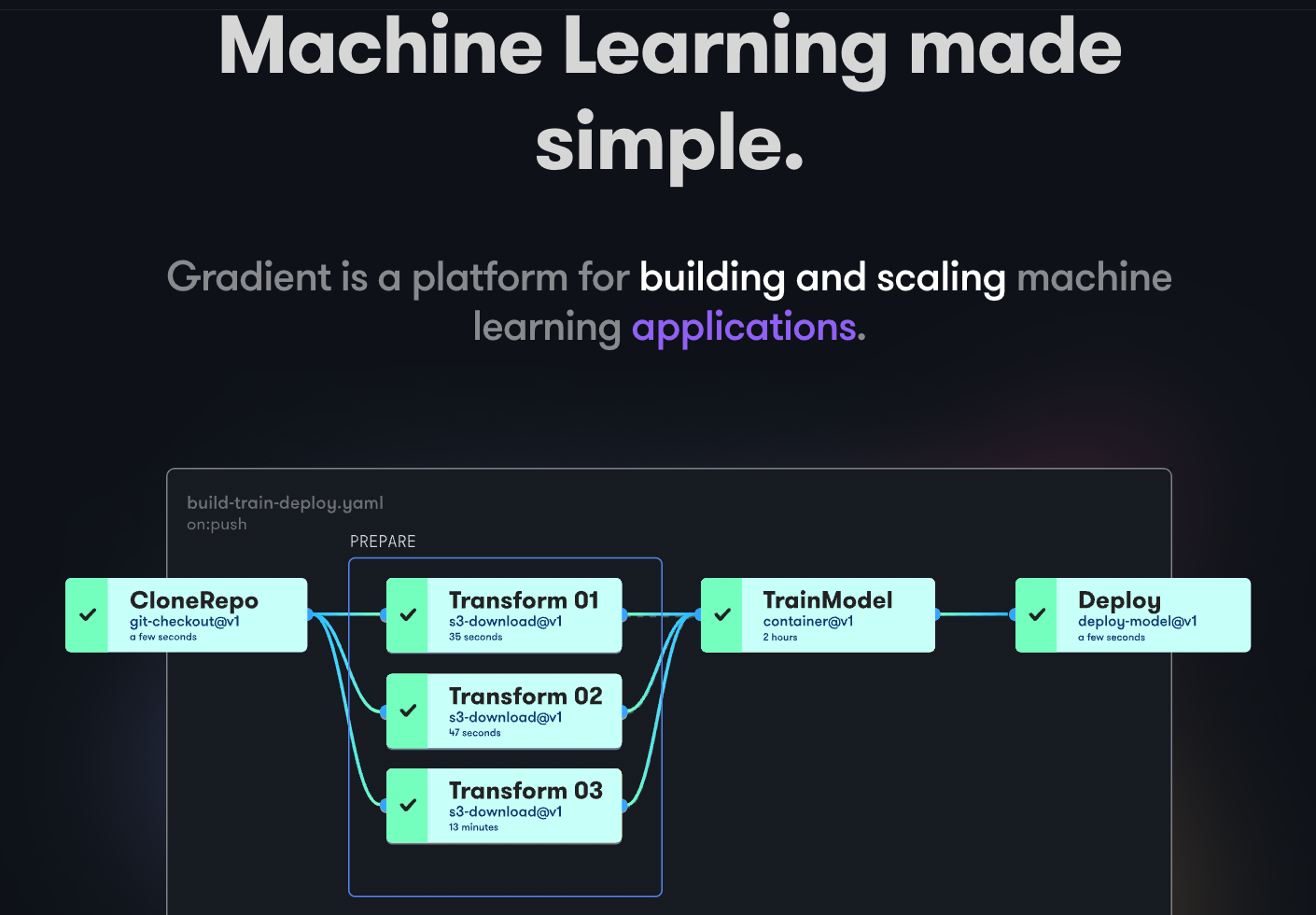

Paperspace Gradient

Gradient helps developers at every stage of the machine learning development cycle.

It provides notebooks, powered by open-source Jupyter, for developing and training models in the cloud on powerful GPUs.

This allows you to explore and prototype models quickly.

Deployment pipelines can be automated by creating workflows.

These workflows are defined by describing tasks in YAML.

Workflows make deployments and model serving easy to replicate and, therefore, to scale.
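A workflow of this kind is essentially a dependency graph of tasks that must run in topological order. The Python sketch below, which needs Python 3.9+ for `graphlib`, shows that execution model with an in-memory dictionary standing in for the YAML file. The task names and the `run_workflow` helper are hypothetical, and Gradient's real YAML schema is not reproduced here.

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Callable, Dict, List, Set


def run_workflow(deps: Dict[str, Set[str]],
                 actions: Dict[str, Callable[[], None]]) -> List[str]:
    """Run every task after the tasks it depends on; return the execution order.

    `deps` maps each task to the set of tasks that must finish first. A cyclic
    workflow raises graphlib.CycleError before any task runs.
    """
    order = list(TopologicalSorter(deps).static_order())
    for task in order:
        actions[task]()
    return order


if __name__ == "__main__":
    # Hypothetical pipeline: prepare data -> train -> evaluate -> deploy.
    workflow = {
        "prepare": set(),
        "train": {"prepare"},
        "evaluate": {"train"},
        "deploy": {"evaluate"},
    }
    actions = {name: (lambda n=name: print(f"running {n}")) for name in workflow}
    run_workflow(workflow, actions)  # prints the four tasks in dependency order
```

Because the order is derived from declared dependencies rather than hard-coded, the same description can be rerun or reused elsewhere, which is what makes workflow-defined pipelines easy to replicate.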

Your pipelines run on Gradient clusters.

These clusters can run on Paperspace Cloud, AWS, GCP, Azure, or other servers.

You can also interact with Gradient programmatically through its CLI or SDK.
