If you're interested in AI, this article will help you dive deep into its intricacies.

The list of platforms in this article serves as a guide toward that goal.

What is LLMOps?

LLMOps stands for Large Language Model Operations.

It's about managing, deploying, and improving large language models such as those behind AI applications.

Although LLMs are easy to prototype, using them in commercial products poses challenges.

LLMOps covers the full cycle from prototype to product, ensuring smooth experimentation, deployment, and enhancement.

The LLMOps platform fosters collaboration among data scientists and engineers, aiding iterative data exploration.

It enables real-time co-working, experiment tracking, model management, and controlled LLM deployment.

LLMOps automates operations, synchronization, and monitoring across the ML lifecycle.

How does LLMOps work?

LLMOps platforms simplify the entire lifecycle of language models.

They centralize data preparation, enable experimentation, and allow fine-tuning for specific tasks.

These platforms also facilitate smooth deployment, continuous monitoring, and seamless version transitioning.

Collaboration is promoted, errors are minimized through automation, and ongoing refinement is supported.

In essence, LLMOps optimizes language model management for diverse applications.
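To make "seamless version transitioning" a little more concrete, here is a minimal sketch of one common approach: sending a fixed share of users to a new model version while everyone else stays on the current one. The function name `pick_version` and the labels `stable` and `candidate` are made up for illustration and do not come from any particular platform.

```python
import hashlib


def pick_version(user_id: str, candidate_share: float) -> str:
    """Route a user to the 'candidate' or 'stable' model version.

    Hashing the user ID keeps the choice deterministic, so the same
    user always lands on the same version for a given share.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Map the first 4 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    return "candidate" if bucket < candidate_share else "stable"


# Send roughly 10% of users to the new version.
assignments = {user: pick_version(user, 0.10) for user in ("alice", "bob", "carol")}
```

Raising `candidate_share` step by step moves traffic over gradually, and setting it back to `0.0` acts as a rollback.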

Benefits of LLMOps

The primary advantages I find significant include efficiency, accuracy, and scalability.

Now, let's move on to our list of platforms.

These applications aren't just user-friendly; they're set for ongoing enhancement.

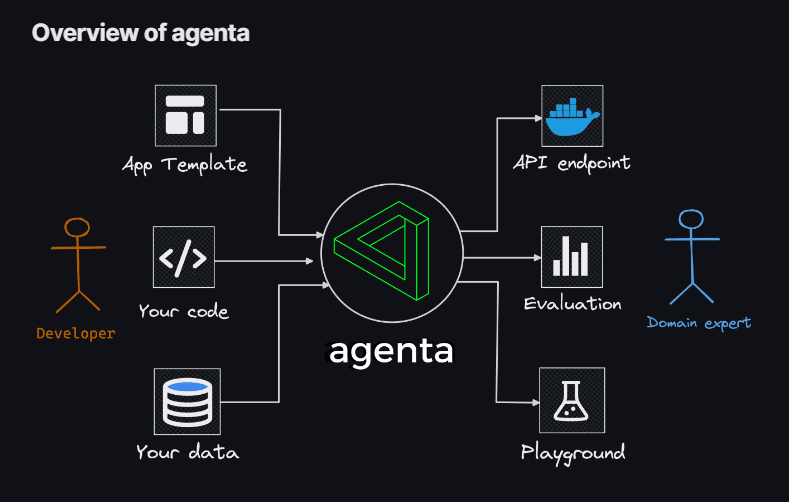

With Agenta, you can quickly experiment with and version prompts, parameters, and intricate strategies.

This encompasses in-context learning with embeddings, agents, and custom business logic.

Furthermore, Agenta fosters collaboration with domain experts for prompt engineering and evaluation.

Another highlight is Agenta's capability to systematically evaluate your LLM apps and deploy them with one click.
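To illustrate what versioning prompts and parameters can look like, here is a minimal sketch in plain Python. It is not Agenta's API: `PromptVariant` and `PromptRegistry` are hypothetical names, and the idea shown is simply that each prompt-plus-parameters combination gets a stable ID derived from its content.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PromptVariant:
    """One combination of prompt template and model parameters (hypothetical)."""
    template: str
    params: dict = field(default_factory=dict)

    @property
    def version_id(self) -> str:
        # Hash the content so identical variants always share the same ID.
        payload = json.dumps(
            {"template": self.template, "params": self.params}, sort_keys=True
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:8]

    def render(self, **variables) -> str:
        return self.template.format(**variables)


class PromptRegistry:
    """In-memory store of variants keyed by version ID (hypothetical)."""

    def __init__(self):
        self._variants = {}

    def register(self, variant: PromptVariant) -> str:
        self._variants[variant.version_id] = variant
        return variant.version_id

    def get(self, version_id: str) -> PromptVariant:
        return self._variants[version_id]


registry = PromptRegistry()
v1 = registry.register(PromptVariant("Summarize: {text}", {"temperature": 0.2}))
v2 = registry.register(
    PromptVariant("Summarize in one sentence: {text}", {"temperature": 0.2})
)
```

Because the ID comes from the content, changing either the template or a parameter yields a new version, which makes experiments easy to compare and reproduce.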

Phoenix

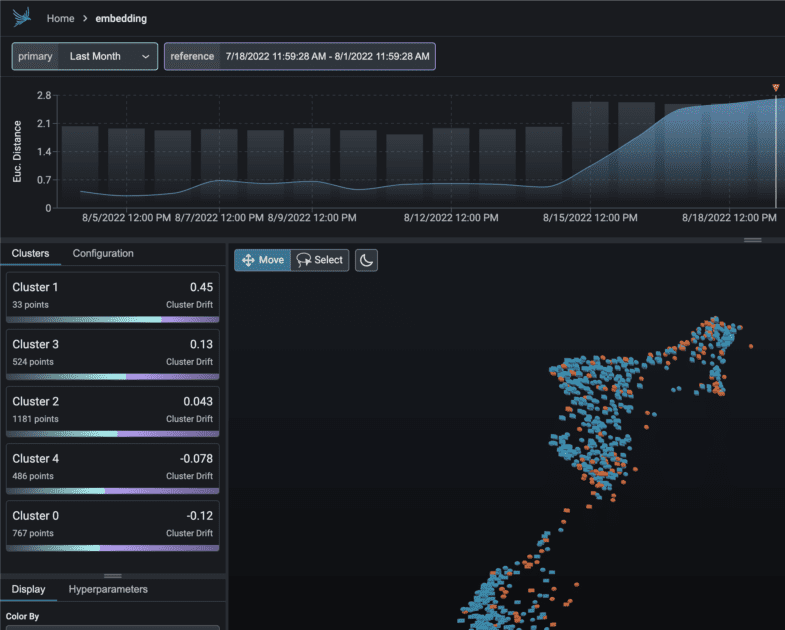

Get instant MLOps insights with Phoenix.

Elevate your models with the capabilities that Phoenix brings to the table.

LangKit

LangKit is an open-source toolkit for text metrics designed to monitor large language models effectively.

The countless potential input combinations, leading to equally numerous outputs, pose a considerable challenge.

The unstructured nature of text further complicates ML observability, a challenge that merits resolution.

After all, a lack of insight into a model's behavior can have significant repercussions.
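As a rough illustration of how text metrics can bring some structure to unstructured output, here is a minimal sketch in plain Python. It does not use LangKit's API; `text_metrics` and `flag_drift` are hypothetical helpers, and the metrics are deliberately simple.

```python
import re


def text_metrics(text: str) -> dict:
    """Compute a few basic numeric metrics for a prompt or response."""
    words = re.findall(r"[A-Za-z0-9']+", text)
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return {
        "char_count": len(text),
        "word_count": len(words),
        "sentence_count": len(sentences),
        "avg_word_length": sum(len(w) for w in words) / len(words) if words else 0.0,
    }


def flag_drift(baseline: dict, current: dict, tolerance: float = 0.5) -> list:
    """Return the metrics whose relative change from the baseline exceeds tolerance."""
    flagged = []
    for name, base in baseline.items():
        if base == 0:
            continue  # Relative change is undefined for a zero baseline.
        if abs(current[name] - base) / base > tolerance:
            flagged.append(name)
    return flagged


baseline = text_metrics("Hello world. How are you?")
current = text_metrics("Hi.")
drifted = flag_drift(baseline, current)
```

Tracking numbers like these over time gives you something to alert on when a model's responses suddenly become much shorter, longer, or otherwise different from what you expect.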

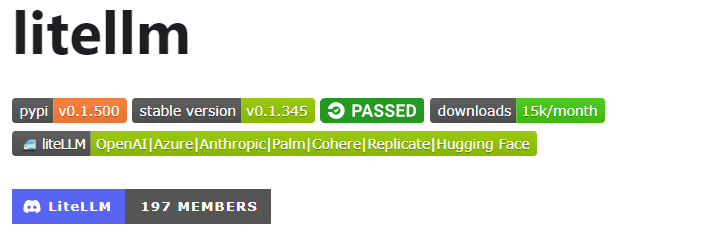

It translates inputs to the relevant provider's completion and embedding endpoints, ensuring uniform output.

Additionally, the package includes an exception mapping feature.

It aligns standard exceptions across different providers with OpenAI exception types, ensuring consistency in handling errors.
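The two ideas above, a uniform output shape and exception mapping, can be sketched in plain Python. This is not the package's real API: the providers `acme` and `globex`, their error classes, and the `completion` function are all hypothetical, and the common error classes are local stand-ins named after OpenAI-style exception types.

```python
# Common error types (local stand-ins named after OpenAI-style exceptions).
class RateLimitError(Exception): ...
class AuthenticationError(Exception): ...
class ProviderError(Exception): ...


# Hypothetical provider-specific errors.
class AcmeTooManyRequests(Exception): ...
class GlobexInvalidKey(Exception): ...


def acme_call(prompt: str) -> dict:
    """Fake provider that returns {'text': ...}."""
    if not prompt:
        raise AcmeTooManyRequests("acme: throttled")
    return {"text": prompt.upper()}


def globex_call(prompt: str) -> dict:
    """Fake provider that returns a nested 'choices' structure."""
    if not prompt:
        raise GlobexInvalidKey("globex: bad key")
    return {"choices": [{"message": prompt[::-1]}]}


# Each provider pairs its call with a function that extracts the text.
PROVIDERS = {
    "acme": (acme_call, lambda raw: raw["text"]),
    "globex": (globex_call, lambda raw: raw["choices"][0]["message"]),
}

EXCEPTION_MAP = {
    AcmeTooManyRequests: RateLimitError,
    GlobexInvalidKey: AuthenticationError,
}


def completion(provider: str, prompt: str) -> dict:
    """Call any provider and return one uniform response shape."""
    call, extract = PROVIDERS[provider]
    try:
        raw = call(prompt)
    except Exception as exc:
        # Translate provider errors into the shared set; fall back to ProviderError.
        common = EXCEPTION_MAP.get(type(exc), ProviderError)
        raise common(str(exc)) from exc
    return {"provider": provider, "content": extract(raw)}
```

With this in place, calling code only needs to handle `RateLimitError`, `AuthenticationError`, and `ProviderError`, no matter which backend served the request.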

LLM-App

Pathway's LLM-App is a Python library designed to accelerate the development of AI applications.

It lets you deliver real-time, human-like responses to user queries.

It does this by drawing on the latest insights in your data sources.

LLMFlows

In real-world scenarios, complexity grows because of the intricate relationships between prompts and LLM calls.

The creators of LLMFlows envisioned an explicit API that empowers users to craft clean and understandable code.

This API streamlines the creation of intricate LLM interactions, ensuring seamless flow among various models.

LLMFlows' classes give users full control, with no hidden prompts or LLM calls.
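To show what "explicit" can mean in practice, here is a minimal sketch of a flow where every prompt and every LLM call is visible in the code. It does not use LLMFlows' actual classes: `FlowStep`, `run_flow`, and `echo_llm` are hypothetical names, and the "model" is a stand-in that just wraps its prompt.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class FlowStep:
    """One named LLM call with an explicit prompt template (hypothetical)."""
    name: str
    template: str
    depends_on: list = field(default_factory=list)


def run_flow(steps: list, llm: Callable[[str], str], inputs: dict) -> dict:
    """Run steps once their dependencies are available; return all results."""
    results = dict(inputs)
    pending = list(steps)
    while pending:
        ready = [s for s in pending if all(d in results for d in s.depends_on)]
        if not ready:
            names = ", ".join(s.name for s in pending)
            raise ValueError(f"Unresolvable dependencies: {names}")
        for step in ready:
            # The prompt is built right here, so nothing is hidden from the caller.
            prompt = step.template.format(**results)
            results[step.name] = llm(prompt)
            pending.remove(step)
    return results


def echo_llm(prompt: str) -> str:
    """Stand-in for a real model call."""
    return f"<{prompt}>"


steps = [
    FlowStep("lyrics", "Write a song called {title}", depends_on=["title"]),
    FlowStep("title", "Suggest a title about {topic}", depends_on=["topic"]),
]
outputs = run_flow(steps, echo_llm, {"topic": "rain"})
```

The steps are listed out of order on purpose: the runner works out that `title` must run before `lyrics`, while the prompts themselves stay in plain sight.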

Promptfoo

Accelerate evaluations through caching and concurrent testing using promptfoo.

It provides a command-line interface (CLI) and a library for assessing LLM output quality.

Moreover, promptfoo enables systematic testing of prompts against predefined test cases.

This aids in evaluating quality and identifying regressions by facilitating direct side-by-side comparison of LLM outputs.
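The workflow described above (several prompts, a fixed set of test cases, cached and concurrent runs, then a side-by-side view) can be sketched in plain Python. This is not promptfoo's API or configuration format: `fake_model`, `evaluate`, and `side_by_side` are hypothetical, and the "model" simply lowercases its prompt so the example runs offline.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache


@lru_cache(maxsize=None)
def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call; the cache skips repeated identical prompts."""
    return prompt.lower()


PROMPTS = {
    "plain": "Greet {name}",
    "polite": "Please greet {name} warmly",
}

TEST_CASES = [
    {"vars": {"name": "Ada"}, "must_contain": "ada"},
    {"vars": {"name": "Linus"}, "must_contain": "linus"},
]


def evaluate(prompts: dict, test_cases: list, model, max_workers: int = 4) -> list:
    """Run every prompt against every test case concurrently."""
    jobs = [(name, index) for name in prompts for index in range(len(test_cases))]

    def run(job):
        name, index = job
        case = test_cases[index]
        output = model(prompts[name].format(**case["vars"]))
        return {
            "prompt": name,
            "case": index,
            "output": output,
            "passed": case["must_contain"] in output,
        }

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run, jobs))


def side_by_side(results: list) -> dict:
    """Group outputs by test case so prompts can be compared directly."""
    table = {}
    for row in results:
        table.setdefault(row["case"], {})[row["prompt"]] = row["output"]
    return table


results = evaluate(PROMPTS, TEST_CASES, fake_model)
comparison = side_by_side(results)
```

Rerunning the same matrix after changing a prompt and checking the `passed` flags is a simple way to spot regressions before they reach users.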

ZenML

With ZenML, your projects can move from the idea stage to being ready for action more smoothly.

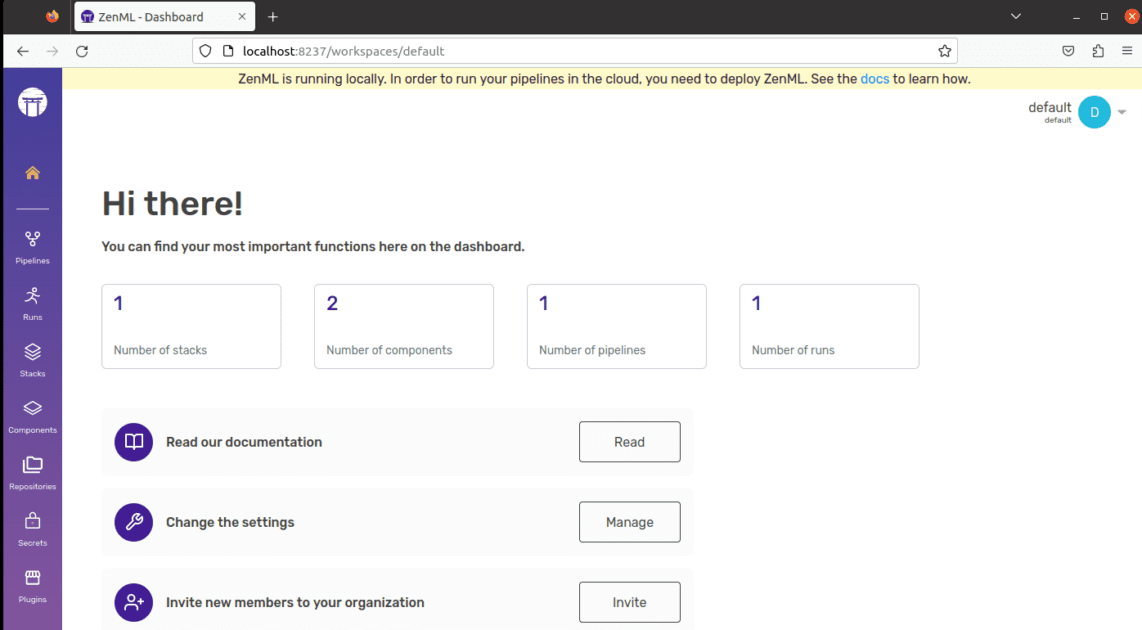

ZenML is made for everyone, whether you're an individual professional or part of an organization.

Just write your code once and use it easily on other systems.

Your selection holds the power to shape your path, so choose wisely!

You may also explore some AI Tools for developers to build apps faster.